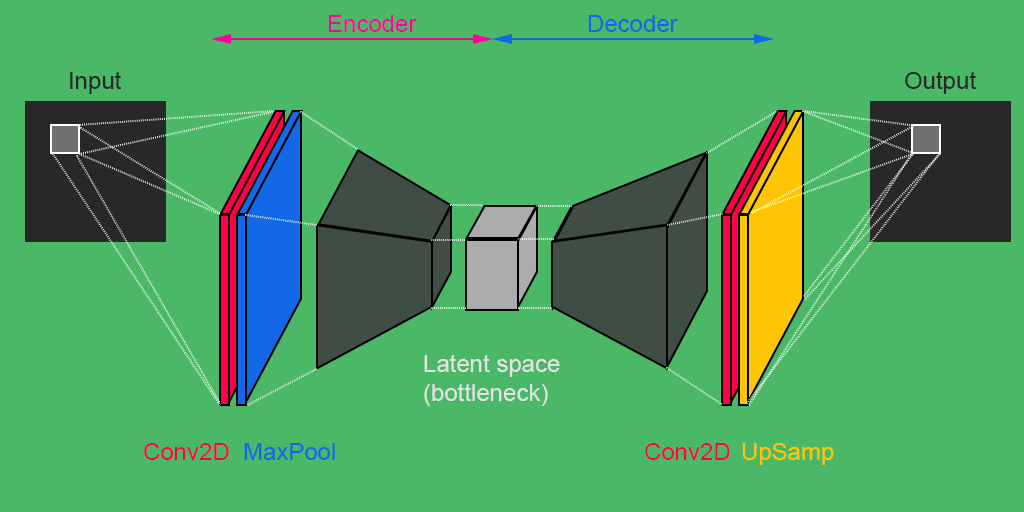

Model: "Convolutional-Autoencoder"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d (Conv2D) | (None, 28, 28, 16) | 160 |
| max_pooling2d (MaxPooling2D) | (None, 14, 14, 16) | 0 |
| conv2d_1 (Conv2D) | (None, 14, 14, 8) | 1160 |
| max_pooling2d_1 (MaxPooling2D) | (None, 7, 7, 8) | 0 |
| conv2d_2 (Conv2D) | (None, 7, 7, 3) | 219 |
| input_2 (InputLayer) | multiple | 0 |
| conv2d_3 (Conv2D) | (None, 7, 7, 8) | 224 |
| up_sampling2d (UpSampling2D) | (None, 14, 14, 8) | 0 |
| conv2d_4 (Conv2D) | (None, 14, 14, 16) | 1168 |
| up_sampling2d_1 (UpSampling2D) | (None, 28, 28, 16) | 0 |
| conv2d_5 (Conv2D) | (None, 28, 28, 1) | 145 |

Total params: 3,076

Trainable params: 3,076

Non-trainable params: 0
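Every parameter in this summary belongs to a Conv2D layer. A Conv2D layer has `kernel * kernel * c_in * c_out` weights plus one bias per output channel, and the pooling and upsampling layers have no weights. The sketch below recomputes the shapes and counts in plain Python. It relies on settings inferred from the numbers rather than stated in the source: 3×3 kernels, "same" padding, biases enabled, and 2×2 pooling and upsampling. The `input_2 (InputLayer)` row is skipped because it carries no weights. The helper names `conv2d_params` and `trace` are hypothetical.

```python
# Hypothetical reconstruction of the layer stack, inferred from the summary
# (assumption): 3x3 kernels, "same" padding, biases on, 2x2 pool/upsample.

def conv2d_params(kernel, c_in, c_out):
    """Weights (kernel*kernel*c_in*c_out) plus one bias per output channel."""
    return kernel * kernel * c_in * c_out + c_out

def trace(input_shape, layers):
    """Walk the layers, tracking (height, width, channels) and param counts."""
    h, w, c = input_shape
    rows = []
    for kind, arg in layers:
        if kind == "conv":
            params = conv2d_params(3, c, arg)
            c = arg  # "same" padding keeps height and width unchanged
        elif kind == "pool":
            # 28 and 14 are even, so floor vs. ceil rounding does not matter here
            h, w = h // arg, w // arg
            params = 0
        elif kind == "up":
            h, w = h * arg, w * arg
            params = 0
        else:
            raise ValueError(f"unknown layer kind: {kind}")
        rows.append((kind, (h, w, c), params))
    return rows

# Encoder: 28x28x1 -> 7x7x3 bottleneck; decoder: 7x7x3 -> 28x28x1.
LAYERS = [
    ("conv", 16), ("pool", 2), ("conv", 8), ("pool", 2), ("conv", 3),
    ("conv", 8), ("up", 2), ("conv", 16), ("up", 2), ("conv", 1),
]

rows = trace((28, 28, 1), LAYERS)
for kind, shape, params in rows:
    print(f"{kind:<5} {str(shape):<14} {params}")
total = sum(p for _, _, p in rows)
print("Total params:", total)
```

Under these assumptions, the per-layer counts (160, 1160, 219, 224, 1168, 145) and the 3,076 total match the summary. The 7×7×3 bottleneck holds 147 values, compared with the 784 pixels of the input.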